The first big question in any AI journey shouldn’t be, "What model should I use?" It's, "How should I build?"

Should you train your own model from scratch, customize an existing one, or skip retraining altogether and use something like RAG (Retrieval-Augmented Generation)? We've found that each path has its own tradeoffs when it comes to cost, control, speed, and performance. By the end of this blog, you should have a clearer sense of which route is right for your project and how to get started.

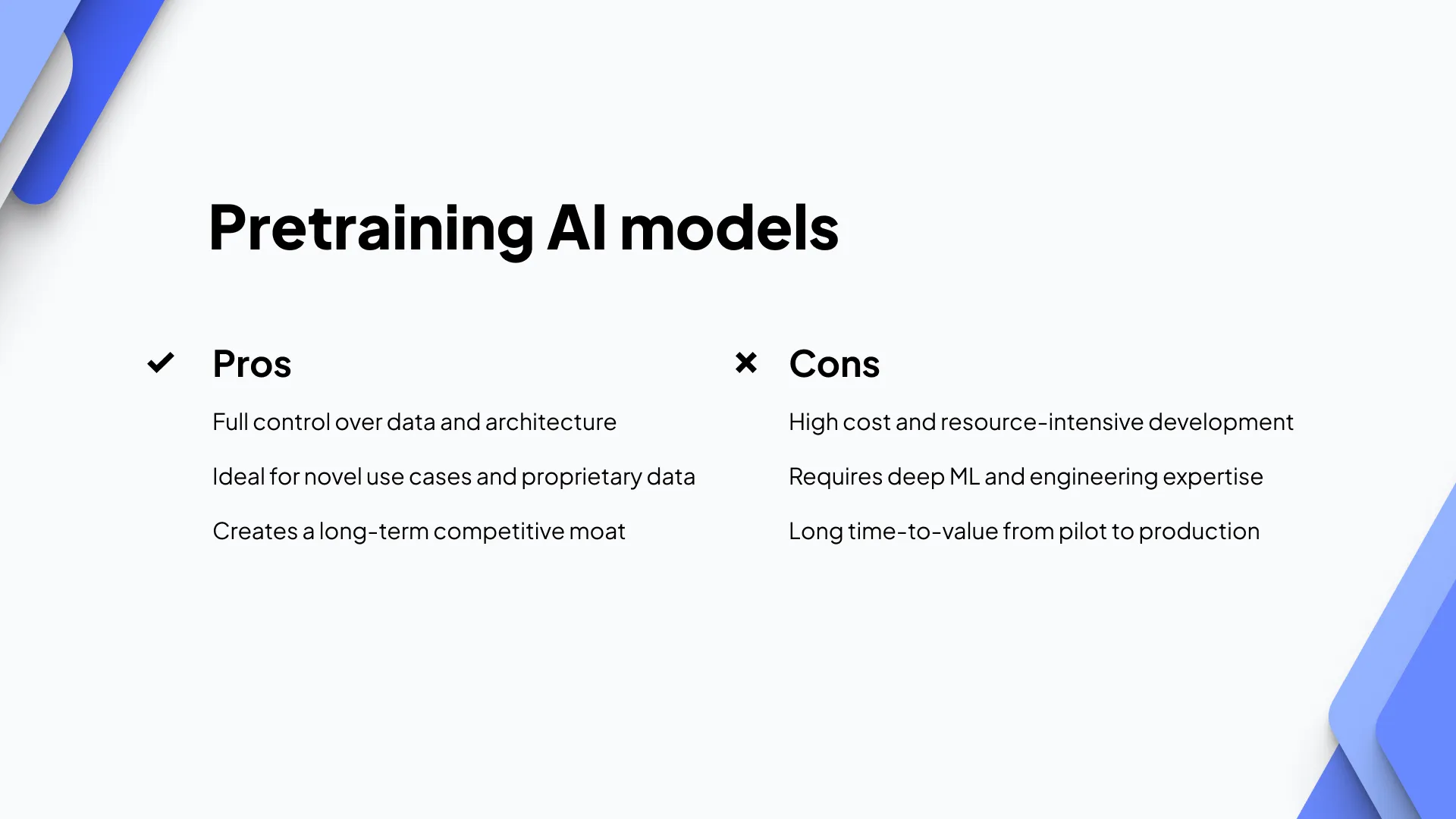

Pretraining vs. fine-tuning vs. RAG: breaking down the pros and cons

Before we dive deep, here's a high-level overview of how these three primary approaches to building AI stack up:

Pretraining: Build it from scratch

Pretraining means creating your own foundational model by training it on massive datasets from the ground up. When you choose this path, you get total control over architecture, data, and behavior. However, pre-training is very computationally expensive and requires huge amounts of data. You'll need deep ML expertise, a high-quality and diverse dataset, and access to serious GPU infrastructure.

Because [pretraining] is a very computationally expensive part, this only happens inside companies maybe once a year or once after multiple months.”

Andrej Karpathy

Co-founder of OpenAI, LLM intro video

The cost of training frontier AI models has grown by 2-3x per year. For example, OpenAI’s GPT-4 used an estimated $78 million worth of compute to train, while Google’s Gemini Ultra cost $191 million for compute. By 2027, the largest models may cost over $1 billion to train from scratch, according to recent research from Epoch AI.

Pre-training is essential when you're working with fundamentally new data or domains where existing models lack a foundational understanding. It establishes the core knowledge and capabilities of a model from scratch. It’s also a long road with high upfront investment and ongoing maintenance.

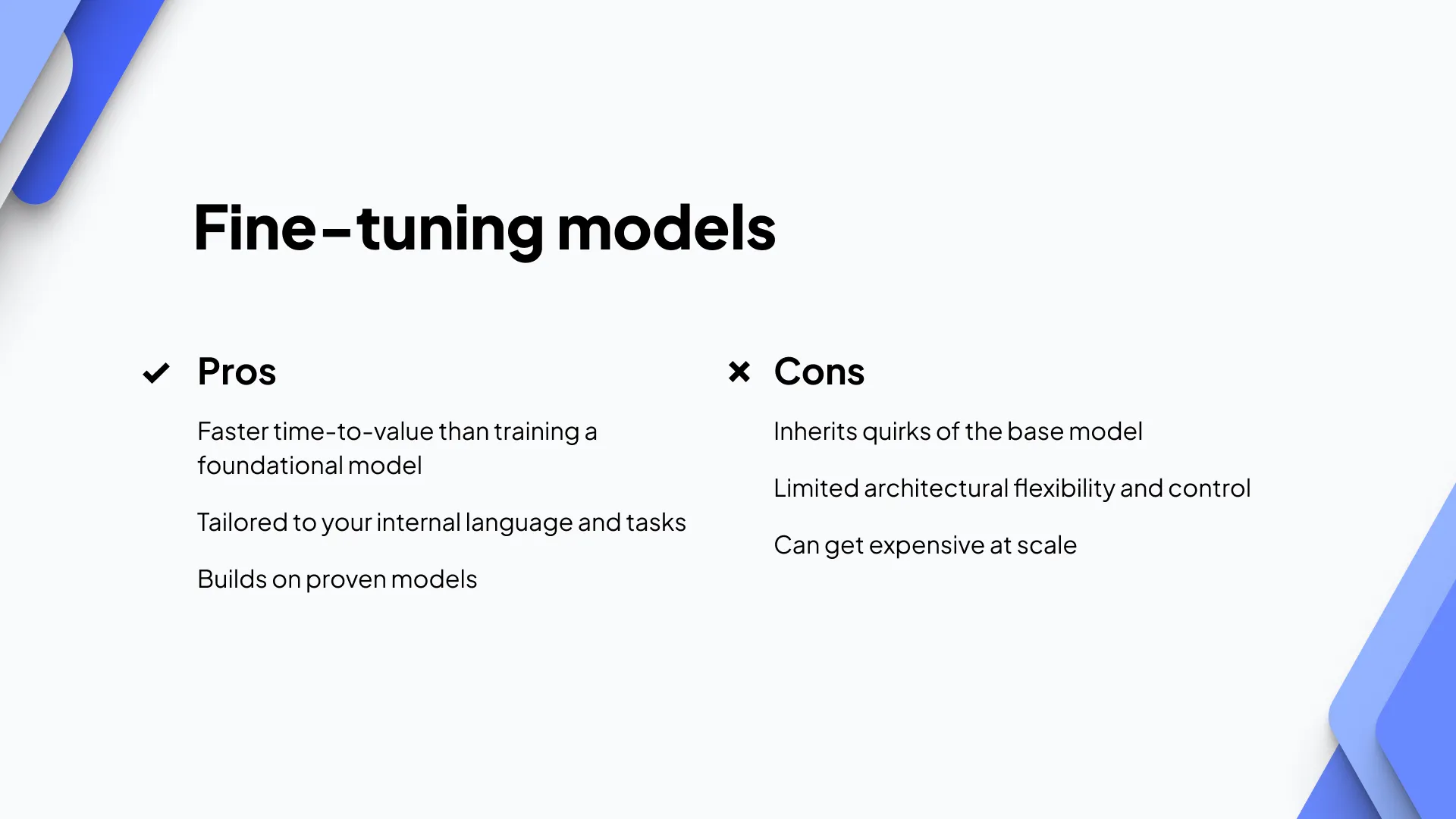

Fine-tuning: Specialize an existing model

Fine-tuning starts with a foundation model that's already been trained, like LLaMA or Mixtral. You then teach it your task or domain using a smaller, focused dataset. In doing so, you are extending the model's knowledge or improving its performance in specific areas using your dataset.

At best, fine-tuning can boost overall model performance and sharpen the model’s capabilities on specific tasks. At worst, fine-tuning can exacerbate the base model's limitations and biases, leading to drops in performance.

Even if you decide to train your own model, you will still need to go through rigorous fine-tuning. All successful models require continuous fine-tuning to introduce new information and stay relevant. It’s a repeated process, not just a one-and-done.

This is a popular choice for companies with niche data or workflows. It's significantly cheaper and faster than pretraining, but still gives you the power to tailor a model to your needs. The catch? You're building on top of someone else's training choices, which means inherited bias, limitations, or surprises, like substantial GPU memory requirements that can make the process expensive and resource-intensive.

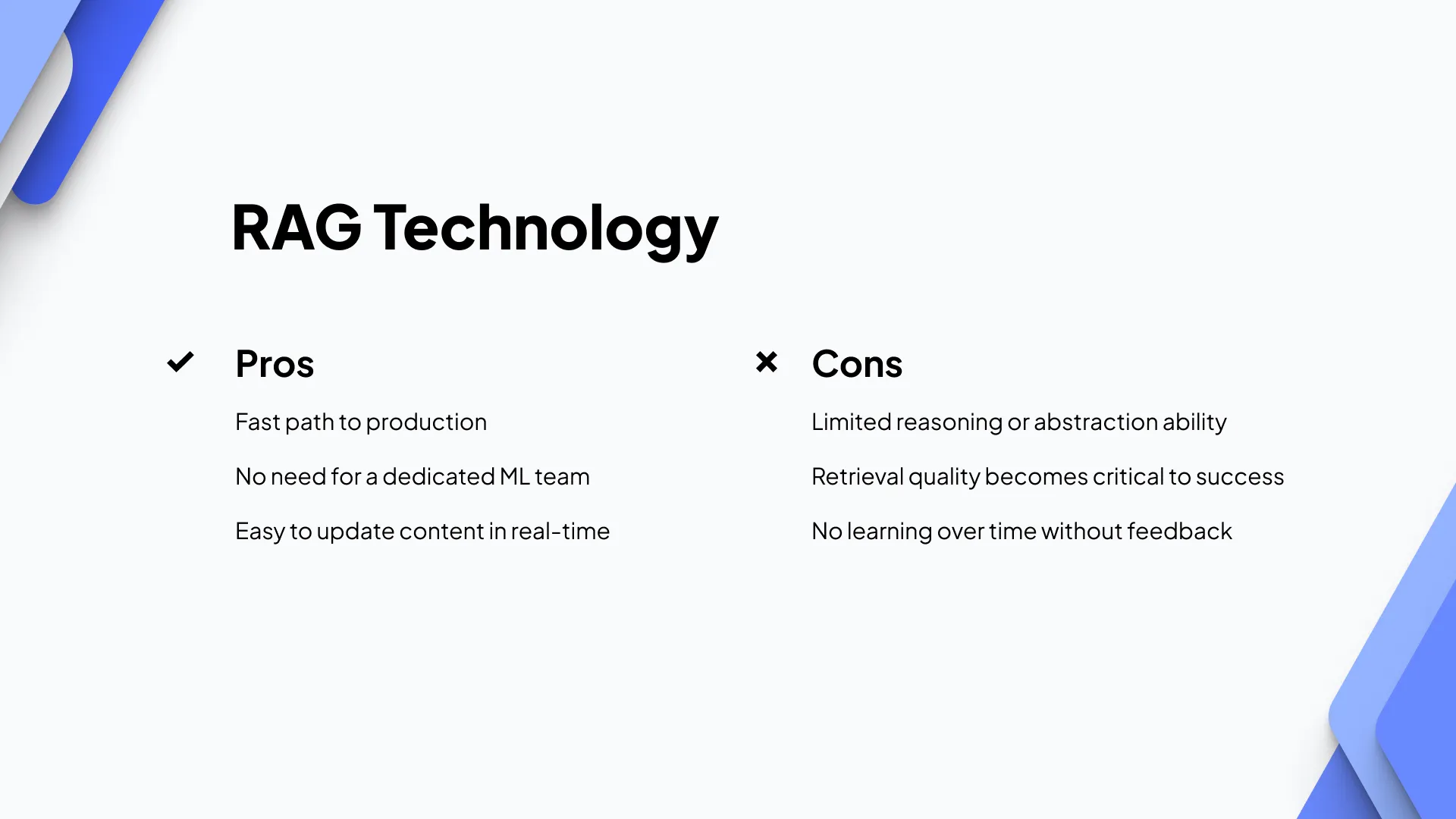

RAG: Retrieve, don’t retrain

RAG (Retrieval-Augmented Generation) is an increasingly common alternative to retraining. Instead of changing the model itself, you augment the prompt at inference time with relevant context from an external source, often a vector database of your own content.

RAG is ideal when you want your model to "know" things without actually updating its weights. It's fast, scalable, and avoids the complexities of fine-tuning. The key is building a robust retrieval pipeline that surfaces the most relevant information for each query.

What makes RAG particularly appealing is its flexibility. You can update your knowledge base in real time, experiment with different retrieval strategies, and even combine multiple data sources without touching the underlying model. This means you can iterate quickly based on user feedback and changing business needs.

Real-world scenarios: How teams actually choose

Let's look at how different industries are making these decisions in practice.

Financial services: When pretraining makes sense

Picture a hedge fund that wants to deploy a proprietary model for real-time trading decisions. Their data is highly confidential, and they require ultra-low-latency inference tuned to specific financial instruments. Pretraining gives them full control over data handling, model size, and performance characteristics, even though it means a multi-million-dollar investment and months of engineering effort.

What their stack might look like:

- NVIDIA H100 or Blackwell GPUs

- InfiniBand networking for ultra-fast model parallelism

- Flash-based storage for rapid checkpointing

- Completion time: 4 to 8 months

- Cost: $10M+

Biotech research: Finetuning’s sweet spot

We've seen pharmaceutical companies build internal chatbots to help R&D teams surface insights from decades of drug discovery data. They fine-tune Mixtral using their internal documentation, creating a model that understands specialized terminology and nuances in ways a generic model never could. The result? Faster experimentation, better collaboration, and preserved IP.

Their typical approach:

- NVIDIA A100s or L40S for fine-tuning runs

- 10K to 100K domain-specific documents

- Token-level filtering and alignment layers

- Completion time: 4 to 6 weeks

- Cost: Low to mid six figures

Enterprise IT: RAG for fast wins

Here's what makes RAG so appealing: it splits up responsibilities. The model handles language generation, but retrieval gets handled by external systems. Inference typically runs on small to mid-sized GPU clusters (or via APIs), while the retrieval pipeline is powered by a vector database.

What teams are building:

- API-accessible model (like GPT-4 or Claude) or self-hosted open-source LLM

- Vector database (Weaviate, Pinecone, etc.)

- Embedding generation pipeline

- Completion time: 1 to 2 weeks

- Cost: Low five figures (infrastructure + dev time + API/model fees)

Hybrid approach: The path of reality

Still unsure what approach is best for you? You’re not alone. In fact, most companies won’t pick just one approach.

You might start with RAG to get value fast, then fine-tune once you've identified gaps, and eventually consider pretraining if your use case demands total control.

Techniques like LoRA (Low-Rank Adaptation) and QLoRA have made fine-tuning more accessible by allowing lightweight parameter updates, while advanced RAG approaches like multi-hop retrieval, query decomposition, and retrieval with reranking are making knowledge integration more sophisticated.

What hybrid implementations look like:

- Start with RAG for immediate deployment and user feedback

- Layer in LoRA or QLoRA fine-tuning for domain-specific improvements

- Implement retrieval reranking and query decomposition for better context

- Consider full fine-tuning or pretraining for critical performance gaps

- Completion time: Iterative (weeks to months depending on complexity)

- Cost: Scales with sophistication, typically mid-five to low-six figures

Where does your AI strategy start?

Your starting point depends on what you're trying to solve. Need full control? Pretraining might be worth the investment. Want domain-specific intelligence quickly? Try fine-tuning. Need to ship yesterday? Start with RAG.

What's your biggest challenge right now: speed to market, data privacy, or something else entirely? The path you choose should solve your most pressing constraint first.

No matter where you are on your AI journey, CoreWeave can help. We've supported teams at every stage, from model training to inference to hybrid deployments.

Explore your infrastructure options:

- Learn about AI model training capabilities and how to make training more cost-effective and reliable

- Discover AI inference solutions for scaling your models in production

- Check out our GPU compute offerings to find the right hardware for your workload

Dive deeper into the technical details:

- Read about serving inference faster with infrastructure that scales securely

- See how CoreWeave achieves 20% higher GPU cluster performance for AI training workloads

Get hands-on:

- Browse our technical documentation for fine-tuning models with CoreWeave

- Explore inference guides and walkthroughs

Questions about which approach is right for your team? Contact our AI specialists to discuss your specific use case and get personalized recommendations.

%20(1).jpg)

.avif)

.jpeg)

.jpeg)

.jpg)