What is MLPerf?

MLPerf is an industry-standard benchmark suite developed by MLCommons to measure and compare machine learning training and inference performance across hardware and platforms.

Why are MLPerf benchmarks important?

MLPerf benchmarks provide a fair, transparent method for evaluating AI hardware and cloud platforms, helping businesses choose solutions that offer optimal performance, scalability, and cost efficiency.

What does MLPerf Training v5.0 measure?

MLPerf Training v5.0 measures how quickly a computing system can train complex machine learning models, such as Meta’s Llama 3.1 405B, from initialization to a specified quality target. Because every submission must reach the same target, results can be compared fairly and transparently across hardware platforms and cloud providers.
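To make the time-to-train idea concrete, here is a minimal Python sketch. It is not the MLPerf reference harness: the function name `time_to_train`, the callbacks `train_one_epoch` and `evaluate`, and the toy accuracy values are all hypothetical, and it assumes a metric where higher is better.

```python
import time

def time_to_train(train_one_epoch, evaluate, quality_target, max_epochs=100):
    """Return (elapsed_seconds, epochs) needed to reach quality_target.

    Assumes evaluate() returns a score where higher is better.
    """
    start = time.perf_counter()
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()
        # Stop the clock as soon as the quality target is met.
        if evaluate() >= quality_target:
            return time.perf_counter() - start, epoch
    raise RuntimeError("quality target not reached within max_epochs")

# Toy stand-in for a real model: accuracy rises by 0.25 per epoch.
state = {"accuracy": 0.0}

def train_one_epoch():
    state["accuracy"] += 0.25

def evaluate():
    return state["accuracy"]

seconds, epochs = time_to_train(train_one_epoch, evaluate, quality_target=0.75)
print(f"reached target after {epochs} epochs in {seconds:.6f} s")
```

The reported number is the elapsed wall-clock time, so a faster system is simply one that reaches the same target sooner.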

What does MLPerf Inference v5.0 measure?

MLPerf Inference v5.0 measures how quickly computing systems process inputs and generate outputs using fully trained machine learning models. It focuses on throughput (for example, tokens per second) and latency across realistic deployment scenarios, so the inference performance of hardware and cloud infrastructure providers can be evaluated and compared.
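As a rough illustration of the two metrics named above, the following Python sketch computes throughput and a tail latency from a list of completed requests. It is not the official MLPerf measurement tooling: the function `summarize`, its parameters, and the sample numbers are assumptions made for this example, and the percentile uses a simple nearest-rank rule.

```python
import math

def summarize(requests, wall_seconds, percentile=0.99):
    """Summarize a run.

    requests: list of (latency_seconds, tokens_generated) tuples.
    wall_seconds: total wall-clock duration of the run.
    """
    total_tokens = sum(tokens for _, tokens in requests)
    latencies = sorted(latency for latency, _ in requests)
    # Nearest-rank percentile: smallest value covering `percentile` of requests.
    rank = math.ceil(percentile * len(latencies))
    return {
        "tokens_per_second": total_tokens / wall_seconds,
        "tail_latency_s": latencies[rank - 1],
    }

# Hypothetical sample: three requests completed during a 2-second run.
sample = [(0.5, 100), (0.75, 200), (1.0, 100)]
result = summarize(sample, wall_seconds=2.0)
print(result)
```

Throughput rewards systems that serve many tokens in total, while tail latency penalizes systems that leave some requests waiting, which is why the two are reported together.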

How does CoreWeave's performance compare in MLPerf benchmarks?

CoreWeave consistently leads MLPerf benchmarks, delivering record-setting performance in both training and inference and significantly outperforming systems built on previous-generation GPUs such as NVIDIA H100 and H200.

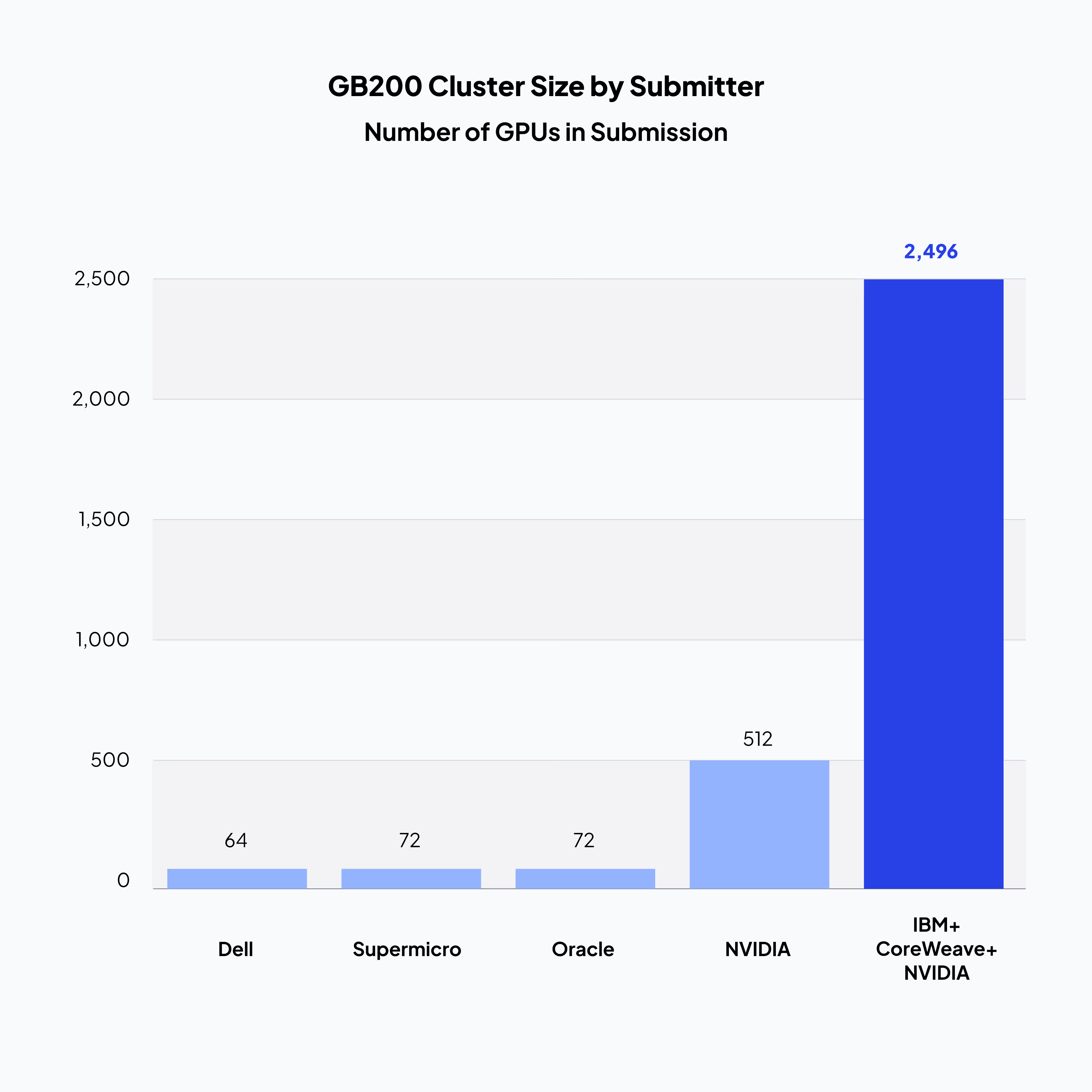

What makes CoreWeave GPUs unique for MLPerf results?

CoreWeave combines the latest NVIDIA GB200 GPUs with optimized infrastructure and a purpose-built cloud platform to achieve unmatched scale and leading training and inference performance.

How can businesses benefit from CoreWeave’s MLPerf results?

Businesses using CoreWeave benefit from faster deployment cycles, reduced infrastructure costs, optimized GPU utilization, and enhanced competitive advantage through superior AI performance.